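This guide deploys three machines with the IPs 192.168.110.10, 192.168.110.11, and 192.168.110.12. All three are Ubuntu 24.04 virtual machines.

Set the hostname and update the hosts file

1) Log in to each node and use the hostnamectl command to set its hostname. Run only the command that matches the node you are on.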

sudo hostnamectl set-hostname "k8s-master"

sudo hostnamectl set-hostname "k8s-node1"

sudo hostnamectl set-hostname "k8s-node2"

Add the following lines to the /etc/hosts file on every node.

192.168.110.10 k8s-master

192.168.110.11 k8s-node1

192.168.110.12 k8s-node2
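Appending these lines by hand on three machines makes it easy to create duplicates when the step is repeated. A minimal sketch of an idempotent helper follows. The function name `add_host_entry` and its optional file argument are assumptions introduced here for illustration; they are not part of the original steps.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file only if NAME is not
# already mapped there. The file defaults to /etc/hosts.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # Look for NAME as a whole field after the address column, ignoring comments.
  if awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
    echo "$name already present in $file"
  else
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Example (run as root on every node):
#   add_host_entry 192.168.110.10 k8s-master
#   add_host_entry 192.168.110.11 k8s-node1
#   add_host_entry 192.168.110.12 k8s-node2
```

Running the helper a second time leaves the file unchanged, so the step can be repeated safely.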

Configure communication between the nodes (passwordless SSH)

ssh-keygen

ssh-copy-id k8s-master

ssh-copy-id k8s-node1

ssh-copy-id k8s-node2

2) Disable swap and load kernel modules

Run the following commands on every node to disable swap.

sudo swapoff -a

sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

sudo grep swap /etc/fstab

sudo swapoff -a

sudo free -h
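The sed pattern above only matches lines where "swap" is surrounded by spaces, so a tab-separated fstab entry could be left active. As a sketch, the check below prints any entries that would still enable swap at boot. The function name `active_swap_entries` is a hypothetical one chosen for this example.

```shell
# Hypothetical check: print fstab entries that would still enable swap at
# boot, meaning uncommented lines whose third field (the type) is "swap".
active_swap_entries() {
  local fstab="${1:-/etc/fstab}"
  awk '!/^[[:space:]]*#/ && $3 == "swap"' "$fstab"
}

# Example: report the result for this node.
#   if [ -z "$(active_swap_entries)" ]; then echo "no active swap entries"; fi
```

If the function prints anything, comment out those lines in /etc/fstab by hand.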

Load the following kernel modules with the modprobe command.

sudo modprobe overlay

sudo modprobe br_netfilter

To load these modules on every boot, create a file with the following contents.

sudo tee /etc/modules-load.d/k8s.conf <<EOF

overlay

br_netfilter

EOF

3) Set the kernel parameters (all nodes)

cat >> /etc/sysctl.conf <<EOF

vm.swappiness = 0

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

Run the following command to load the kernel parameters above.

sysctl -p
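To confirm that the values are live after sysctl -p, they can be read back from /proc/sys. The sketch below does that for a list of "key value" pairs. The function name `check_sysctls` and its optional second argument (an alternate root directory) are assumptions made for this example.

```shell
# Hypothetical check: compare expected kernel parameters with live values.
# $1 is a newline-separated list of "key value" pairs. $2 is the directory
# to read from and defaults to /proc/sys.
check_sysctls() {
  local expected="$1" root="${2:-/proc/sys}" rc=0 key want have
  while read -r key want; do
    [ -n "$key" ] || continue
    # net.ipv4.ip_forward is stored at <root>/net/ipv4/ip_forward
    have="$(cat "$root/$(printf '%s' "$key" | tr . /)" 2>/dev/null)"
    if [ "$have" = "$want" ]; then
      echo "ok   $key = $have"
    else
      echo "FAIL $key = ${have:-<unset>} (want $want)"
      rc=1
    fi
  done <<EOF
$expected
EOF
  return $rc
}

# Example:
#   check_sysctls "vm.swappiness 0
#   net.ipv4.ip_forward 1
#   net.bridge.bridge-nf-call-iptables 1
#   net.bridge.bridge-nf-call-ip6tables 1"
```

A FAIL on the net.bridge keys usually means the br_netfilter module from step 2 is not loaded.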

Configure IPVS

Install the IPVS tools

sudo apt install -y ipset ipvsadm

Configure the kernel to load the IPVS modules at boot

cat <<EOF | sudo tee /etc/modules-load.d/ipvs.conf

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack

EOF

Load the IPVS modules now

sudo modprobe ip_vs

sudo modprobe ip_vs_rr

sudo modprobe ip_vs_wrr

sudo modprobe ip_vs_sh

sudo modprobe nf_conntrack
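After the modprobe calls, it can help to confirm that every module is actually loaded. The sketch below compares a list of names against /proc/modules. The function name `missing_modules` is hypothetical. Modules compiled directly into the kernel do not appear in /proc/modules, so they would be reported as missing even though they are available.

```shell
# Hypothetical check: print each expected module that is not listed in the
# given modules file. The first field of each line is the module name.
missing_modules() {
  local list="$1" m
  shift
  for m in "$@"; do
    awk -v m="$m" '$1 == m { found = 1 } END { exit !found }' "$list" || echo "$m"
  done
}

# Example:
#   missing_modules /proc/modules overlay br_netfilter ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack
```

No output means every listed module was found.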

4) Install and configure Containerd (all nodes)

Containerd provides the container runtime for Kubernetes.

Install the container dependencies

sudo apt install -y curl gnupg2 software-properties-common apt-transport-https ca-certificates

Install docker/containerd. If you plan to use ctr, do not install docker or docker-compose; installing containerd alone is enough.

apt update

apt install -y ca-certificates curl gnupg lsb-release

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

apt-get update

apt install docker-ce docker-ce-cli containerd.io docker-compose -y

cat > /etc/docker/daemon.json <<EOF

{

"registry-mirrors": [

"https://docker.mirrors.ustc.edu.cn",

"https://hub-mirror.c.163.com",

"https://reg-mirror.qiniu.com",

"https://registry.docker-cn.com"

],

"exec-opts": ["native.cgroupdriver=systemd"],

"data-root": "/data/docker",

"log-driver": "json-file",

"log-opts": {

"max-size": "20m",

"max-file": "5"

}

}

EOF

systemctl restart docker.service

systemctl enable docker.service

Configuration for containerd mode: enable SystemdCgroup and change the sandbox image source

# Generate the containerd configuration file

sudo mkdir -p /etc/containerd/

containerd config default | sudo tee /etc/containerd/config.toml >/dev/null 2>&1

# Edit /etc/containerd/config.toml and set SystemdCgroup to true

sudo sed -i 's/SystemdCgroup \= false/SystemdCgroup \= true/g' /etc/containerd/config.toml

# Verify the configuration

sudo cat /etc/containerd/config.toml | grep SystemdCgroup

# Change the sandbox image source

sudo sed -i "s#registry.k8s.io/pause#registry.cn-hangzhou.aliyuncs.com/google_containers/pause#g" /etc/containerd/config.toml

# Verify the configuration

sudo cat /etc/containerd/config.toml | grep sandbox_image
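The two grep checks above can be folded into one pass/fail check. The sketch below is one way to do that. The function name `check_containerd_config` is an assumption for this example, and it looks for any remaining `registry.k8s.io/pause` reference rather than a specific key name, since the key may differ between containerd versions.

```shell
# Hypothetical check: confirm both edits made above are present in a
# containerd config file. Returns non-zero if either one is missing.
check_containerd_config() {
  local cfg="${1:-/etc/containerd/config.toml}" rc=0
  if grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$cfg"; then
    echo "SystemdCgroup: true"
  else
    echo "SystemdCgroup is not set to true"
    rc=1
  fi
  if grep -q 'registry.k8s.io/pause' "$cfg"; then
    echo "pause image still points at registry.k8s.io"
    rc=1
  fi
  return $rc
}

# Example:
#   check_containerd_config && echo "containerd config looks right"
```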

Restart the containerd service so the changes above take effect, then enable it at boot and check its status.

systemctl restart containerd

systemctl enable containerd

systemctl status containerd

Configuration for cri-dockerd mode:

# Download the cri-dockerd build for your architecture from GitHub

https://github.com/Mirantis/cri-dockerd/releases

# Install

dpkg -i ./cri-dockerd_0.3.14.3-0.ubuntu-jammy_amd64.deb

sed -ri 's@^(.*fd://).*$@\1 --pod-infra-container-image registry.aliyuncs.com/google_containers/pause@' /usr/lib/systemd/system/cri-docker.service

# Restart

systemctl daemon-reload && systemctl restart cri-docker && systemctl enable cri-docker

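5) Add the Kubernetes package repository

Kubernetes packages are not available in the default Ubuntu 24.04 package repositories, so add the Kubernetes repository first. Do this on every node.

Note: at the time of writing, the latest Kubernetes version is 1.30, so choose the version that fits your needs.

Use curl to download the public signing key for the Kubernetes package repository.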

apt-get update && apt-get install -y apt-transport-https

curl -fsSL https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.30/deb/Release.key |

gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.30/deb/ /" |

tee /etc/apt/sources.list.d/kubernetes.list

6) Install the Kubernetes components kubeadm, kubelet, and kubectl

sudo apt update

# Install dependencies

sudo apt-get update && sudo apt-get install -y apt-transport-https ca-certificates curl gpg

# List the available versions

apt-cache madison kubeadm

sudo apt install kubelet kubeadm kubectl -y

# Hold the versions so that apt upgrade does not update them

sudo apt-mark hold kubelet kubeadm kubectl
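The install command above takes whatever version apt considers newest. To install one explicit version from the `apt-cache madison` list instead, something like the sketch below could be used. The helper name `pick_version` is hypothetical, and the example assumes kubelet, kubeadm, and kubectl are published with the same version string, which is worth checking in the madison output first.

```shell
# Hypothetical helper: read `apt-cache madison <pkg>` output on stdin and
# print the highest version that starts with the given prefix.
pick_version() {
  awk -F'|' -v p="$1" '{ gsub(/ /, "", $2); if (index($2, p) == 1) print $2 }' \
    | sort -V | tail -n 1
}

# Example (assumes all three packages share the same version string):
#   ver="$(apt-cache madison kubeadm | pick_version 1.30.)"
#   sudo apt install -y kubelet="$ver" kubeadm="$ver" kubectl="$ver"
```

`sort -V` compares version numbers numerically, so 1.30.13 sorts after 1.30.2.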

# kubectl command completion

sudo apt install -y bash-completion

kubectl completion bash | sudo tee /etc/profile.d/kubectl_completion.sh > /dev/null

. /etc/profile.d/kubectl_completion.sh

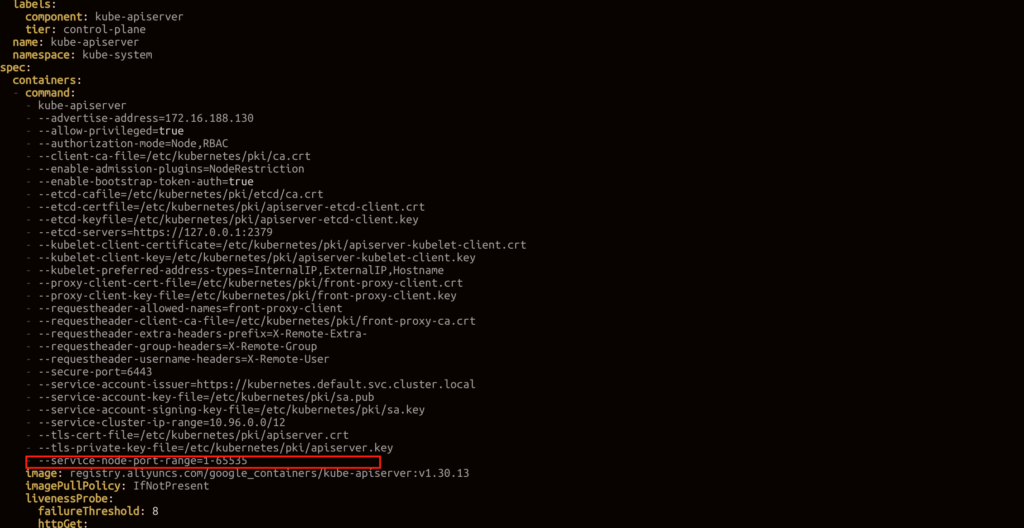

7) Initialize the Kubernetes master node

# List the required images

kubeadm config images list

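# Pull the images in advance

Alibaba Cloud: registry.aliyuncs.com/google_containers

Alibaba Cloud (arm): registry.cn-shenzhen.aliyuncs.com/starbucket

Tencent Cloud (arm): ccr.ccs.tencentyun.com/starbucket

Tencent Cloud (amd): ccr.ccs.tencentyun.com/starbucket_amd

# Replace v1.30.13 with your version and the quoted placeholder with one of the image repository addresses above

kubeadm config images pull --kubernetes-version=v1.30.13 --image-repository "<image-repository-address>"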

# Initialize the cluster directly from the command line

sudo kubeadm init --control-plane-endpoint=k8s-master \
--image-repository=ccr.ccs.tencentyun.com/starbucket

# Or generate a configuration file to customize

kubeadm config print init-defaults > kubeadm.yaml

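After initialization succeeds, follow the printed instructions to create the configuration directory and copy the configuration file.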

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

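Join the worker nodes using the parameters of your own cluster. The address, token, and hash below are examples; use the join command printed by kubeadm init.

kubeadm join 192.168.140.75:6443 \
--token abcdef.0123456789abcdef \
--discovery-token-ca-cert-hash sha256:0deaa9ceed7266c28c5f5241ed9efea77c798055ebcc7a27dc03f6c97323c8a0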
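Bootstrap tokens expire (after 24 hours by default), and running `kubeadm token create --print-join-command` on the master prints a fresh join command. A copy-paste slip in the token is a common cause of a failed join, so the small sketch below checks that a token has the expected shape of six characters, a dot, and sixteen characters. The function name `is_bootstrap_token` is hypothetical.

```shell
# Hypothetical check: succeed only if the argument looks like a kubeadm
# bootstrap token, i.e. [a-z0-9]{6}.[a-z0-9]{16}.
is_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Example:
#   is_bootstrap_token "abcdef.0123456789abcdef" || echo "token looks malformed"
```

This only checks the format; it does not tell you whether the token is still valid on the cluster.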

When the join completes, check the nodes.

kubectl get nodes

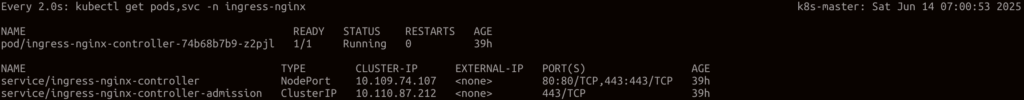

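8) On the master node, install the Calico pod network

Configuration file download URL: https://resource.obsbothk.com/Soft/K8S/calico/calico.yaml

I have already modified this configuration to use my own registry mirror in China, so image pulls should not fail.

Change the CIDR in the file to the pod network range used when the cluster was initialized.

Corresponding settings:

--pod-network-cidr=10.244.0.0/16

podSubnet: 10.244.0.0/16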

wget https://resource.obsbothk.com/Soft/K8S/calico/calico.yaml
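Before applying the manifest, it may be worth looking at the CIDR it actually contains. The sketch below assumes the downloaded file keeps the upstream Calico setting name `CALICO_IPV4POOL_CIDR`; the function name `show_pool_cidr` is hypothetical.

```shell
# Hypothetical helper: print the line naming CALICO_IPV4POOL_CIDR and the
# line after it (where the value normally sits), with line numbers.
show_pool_cidr() {
  grep -n -A1 'CALICO_IPV4POOL_CIDR' "${1:-calico.yaml}"
}

# Example:
#   show_pool_cidr calico.yaml
```

If the printed value differs from the pod network range of your cluster, edit it before running kubectl apply. If both lines start with `#`, the setting is commented out in the manifest.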

# Create the Calico resources

kubectl apply -f calico.yaml

# Watch the status of the pods for each service

watch kubectl get pods --all-namespaces -o wide

# Check the network status of the nodes

kubectl get nodes
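Nodes normally move from NotReady to Ready once the Calico pods are running. As a sketch, the filter below lists only the nodes that are not yet Ready, reading the output of `kubectl get nodes --no-headers` from stdin. The function name `not_ready_nodes` is hypothetical.

```shell
# Hypothetical filter: print the names of nodes whose STATUS column does not
# start with "Ready". Expects `kubectl get nodes --no-headers` on stdin.
not_ready_nodes() {
  awk '$2 !~ /^Ready/ { print $1 }'
}

# Example:
#   kubectl get nodes --no-headers | not_ready_nodes
```

An empty result means every node reports Ready.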
